Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.

0:01

Support for the show comes from Chevrolet.

0:04

Artificial intelligence, smart houses, electric vehicles, we

0:06

are living in the future. So why

0:08

not make 2024 the year you go

0:11

fully electric with Chevy? The

0:13

all-electric 2025 Equinox EV LT

0:15

starts around $34,995. Equinox

0:20

EV, a vehicle you know, value you'd

0:22

expect, and a dealer right down the

0:25

street. Go EV without changing a thing.

0:28

Learn more at

0:30

chevy.com/equinoxev. The manufacturer's

0:32

suggested retail price excludes tax, title,

0:35

license, dealer fees, and optional equipment.

0:37

Dealer sets final price. Support

0:42

for this show comes from Mercury. Business

0:45

finances are complex, and the solution

0:47

isn't what you think. It's

0:49

not another tool or even a secret.

0:52

It's the bank account, the one thing

0:54

every business needs. Mercury

0:56

simplifies your financial operations with powerful

0:58

banking, corporate credit cards, bill pay,

1:01

invoicing, and more, all in one

1:03

place. Apply in

1:05

minutes at mercury.com. Support

1:11

for this show comes from Amazon Business. We

1:14

could all use more time. Amazon

1:17

Business offers smart business buying solutions, so

1:19

you can spend more time growing your

1:21

business and less time doing the admin.

1:24

I can see why they call it smart. Learn

1:26

more about smart business buying at

1:28

amazonbusiness.com. Hi,

1:44

everyone. From New York Magazine and the Vox

1:47

Media Podcast Network, this is On with Kara

1:49

Swisher, and I'm Kara Swisher. Last

1:51

week, on a muggy evening in New

1:53

York City, I visited the legendary Paris

1:55

Theater on 58th Street to interview the

1:58

also iconic Bill Gates about his latest

2:00

Netflix docu-series. It's

2:02

called What's Next, the Future with Bill Gates, and

2:04

it's about some of the biggest issues we face

2:06

at the moment. AI, disinformation,

2:08

global health, the wealth gap, and

2:11

how technology could help or

2:13

not. It's a funny and

2:15

substantive series by Morgan Neville who

2:17

did docs like Won't You Be

2:19

My Neighbor about Mr. Rogers, Roadrunner

2:21

about Anthony Bourdain, and 20 Feet

2:23

from Stardom, which garnered him an

2:25

Oscar. It's a good match

2:27

since Neville got Gates to actually use his

2:29

own experiences as case studies in some of

2:32

the episodes. It's surprisingly intimate actually,

2:34

and I actually really liked it. I didn't think

2:36

it would be as good as it was, and

2:38

it really is, especially for people who don't know

2:40

a lot about these topics. It

2:42

was also great to be back on stage with Bill,

2:44

whom I've interviewed many times, including an

2:47

historic interview with the late Steve Jobs.

2:49

While we've had plenty of disagreements over the

2:52

three decades I've covered Microsoft and also Bill

2:54

Gates, I took the opportunity to dig a

2:56

bit deeper and ask him about his interests

2:58

and investments into many of these areas, including

3:00

nuclear power, how he would handle attacks on

3:02

the rich, and the outcome

3:05

he's hoping for in the upcoming

3:07

presidential election. Plot spoiler,

3:09

he implied, referencing climate change deniers,

3:11

that he's voting for Vice President

3:13

Kamala Harris, although he certainly didn't

3:15

make an endorsement. I hope

3:18

you enjoy it, and by the way, we love

3:20

that you're listening, and it's even better if you

3:22

hit subscribe to follow the show to

3:24

get even more exclusive conversations like this

3:26

in your feed every Monday and Thursday.

3:29

So let's get to it live from

3:31

the Paris Theater.

3:34

[Music]

3:40

Hi,

3:43

everybody. How you doing?

3:45

I used to come to this theater as a

3:47

kid. It's fantastic to be here, owned

3:49

by Netflix. Wow, that's something. Anyway,

3:52

thank you for coming. It's

3:54

a little lightness here, inequality,

3:56

AI, climate change, disease.

4:00

What else are we talking about? But at least

4:02

we're not Eric Adams, are we? Okay, I had to

4:05

do that. Oh, come on. Anyway,

4:09

I love New York. Anyway,

4:11

I'm very excited actually to talk to Bill. I've interviewed

4:13

him dozens of

4:15

times over the many years. We

4:17

first met when he came pouring

4:19

out of a cab in very sweaty,

4:21

summery Washington, DC. I thought

4:23

he had arrived in the limo but it

4:25

was some other guy and he just got

4:27

out of a DC cab and did an

4:29

amazing interview with the Washington Post editorial board

4:33

and came himself and everything else. So

4:35

that's where we started. I've covered Microsoft

4:37

for years and talked

4:40

to him a number of times. We've agreed, not

4:42

that much, but disagreed a lot. But also I'm

4:44

really, I think this phase of his

4:46

life is really interesting and he really is an

4:48

information sponge, curious, and he

4:51

loves to take apart problems and

4:53

I think what he's

4:56

done around disease and other things is really interesting.

4:59

Last interview we did was about climate change, which

5:01

is a book that he wrote and

5:03

it's actually coming true today, a lot of the stuff

5:06

he was talking about. So without further ado, Bill Gates.

5:19

We meet again. So there's

5:23

so much to talk about. I think what we're going to do is

5:25

we're going to throw to some clips and then talk about them. We

5:28

literally have to rush through this stuff because we've only

5:30

got about 40 minutes to get through all these major

5:32

topics and you could do hours on each of these

5:34

things. But let's start with AI.

5:36

Let's go to the clip to start with and

5:38

you're talking to James Cameron. Me

5:41

as an innovation can solve

5:43

everything type person says, oh, thank goodness.

5:45

Now I have the AI on my

5:47

team. Yeah, I'm probably more of a

5:49

dystopian. I write science fiction. I wrote

5:52

the Terminator. Where

5:54

do you and I find common ground around

5:56

optimism? I think is the key here. I

5:58

would like the message to be, be balanced

6:00

between this longer-term

6:03

concern of infinite

6:05

capability with the

6:07

basic needs to have your health

6:10

care taken care of, to learn,

6:12

to accelerate climate innovation. Is

6:15

that too nuanced a message to say that AI

6:18

has these benefits while

6:20

we have to guard against these

6:22

other things? I don't think it's too nuanced at

6:24

all. I think it's exactly the right degree of

6:26

nuance that we need. I mean, you're a humanist,

6:28

right? As long as that humanist

6:31

principle is first and foremost, as

6:33

opposed to the drive to dominate market

6:35

share, the drive to power, if we

6:37

can make AI the force for good

6:39

that it has the potential to be,

6:41

great. But

6:44

how do we introduce caution? Regulation

6:47

is part of it, but I think

6:49

it's also our own ethos and our

6:52

own value system. So as

6:54

a perfect person, the creator of the Terminator, which I

6:56

think has informed a lot of people about AI, the

6:58

idea that it's here to kill us, essentially. And

7:02

the first person I talked about AI with was

7:04

Elon Musk, actually, who was quite worried about it.

7:07

At the time, he thought it was here to

7:09

kill us. Later, he evolved it into it treating

7:11

us like house cats. Then

7:13

he said we were like anthills, that the highway

7:15

will come through and just cover us, et cetera.

7:17

Always a happy day with Elon Musk. But

7:21

when I was watching this, I watched it again on the train

7:23

up here. It's advanced quicker

7:25

than anyone, including yourself, had

7:27

thought, right? That it's moved quicker. And you talk about

7:29

this. And it's a very good explainer in the film.

7:32

But I want you to sort of unpack

7:34

why you're still very sunny about it, I

7:37

would say. And I think you lean towards

7:39

the possibility. So talk a little bit about

7:41

that. Well,

7:44

the idea that computers

7:46

would eventually become intelligent and

7:48

do human-like things, as

7:50

soon as you learn how to program, you're

7:53

thinking, wow, what can't

7:55

software do? And why

7:57

not? And

7:59

so, prosaic things like visual

8:01

recognition, speech recognition, seemed

8:03

out of reach. I was age 15

8:06

when I saw this Stanford, SRI, Shakey

8:08

the robot video, and I thought, wow,

8:10

we are getting close, look at that.

8:13

Shakey almost can do these things.

8:15

So it has been mostly

8:19

disappointing. When the

8:21

neural nets came in, then the

8:23

prosaic stuff, the speech and video

8:25

got strong. But even then, the

8:28

idea of representing knowledge,

8:30

being able to read and write still

8:33

seemed out of reach. And I

8:35

believed that we'd have to explicitly

8:37

understand how to represent knowledge.

8:39

I didn't think that pure reinforcement

8:42

would cause this great

8:45

knowledge representation to emerge.

8:47

And even GPT-3, it's

8:49

still statistical. It's not

8:51

really a world model.

8:54

And so it's going to be

8:56

deeply flawed. But by GPT-4, that

8:59

was wrong. And so right

9:02

up there with my first demo of graphics

9:05

interface with Charles Simonyi, I'd say that

9:07

demo of ChatGPT-4 was the most amazing

9:11

demo I've ever seen. I think a

9:13

lot of people attribute the huge amount

9:15

of information we've been putting into the

9:17

internet right now as the leaping point. What

9:20

do you, because I was

9:22

at a dinner with a very smart group

9:24

of technologists and they didn't know. So

9:26

talk about not knowing, because software

9:28

is about knowing. You put

9:31

something in, you take something out. But

9:33

what do you attribute the leap to

9:35

recently? Well, there's a subtlety

9:37

to how the world gets represented

9:40

that this has captured. If

9:42

you told me to write a piece of software that

9:44

you could say, rewrite this

9:47

like Shakespeare would, or like

9:49

Trump would, or like Harari

9:51

would, this

9:53

thing, I would

9:55

have no idea how to write a piece

9:57

of software that is as fluent as

10:00

this is. And so that's an

10:02

emergent set of capabilities that shows

10:04

that it is in a

10:06

deep, deep sense representing what it

10:08

is to write like Shakespeare. Right.

10:12

And how our algorithms generate

10:14

that is subtle enough.

10:18

And we have a lot of mathematicians

10:20

working on trying to figure out those

10:22

representations, but it does make

10:25

it harder for us to know,

10:27

okay, where is it correct? Where

10:29

is it incorrect? Right, but it's

10:31

not Shakespeare. It can write like Shakespeare.

10:33

When does it move to whatever

10:36

Shakespeare may be? That's

10:38

the, it's representing us. Well,

10:41

if your goal is utilitarian to

10:43

say, compare

10:46

humans in terms of medical diagnosis

10:48

to this ability

10:50

to look at all the medical records and

10:52

see outcomes, to look at all the latest

10:54

medical literature. To look at your sensor

10:57

data over your lifetime.

11:00

Clearly the computer is very

11:02

close and will exceed human

11:05

diagnosis capability within the

11:07

next few years. It will be superior at

11:09

a very profound

11:11

and important task. And

11:15

that's just provable that

11:17

it will be better. If you're

11:19

talking about art, okay, then, we

11:21

don't have an agreed utility

11:24

function. Why

11:26

is some art more popular than others? Okay,

11:28

that, it can't enter

11:30

that realm because there's no data

11:32

to center it on

11:35

a common metric. What then

11:37

is it lacking? I mean, you're

11:39

talking about the utility. You often

11:41

talk about healthcare. Education is another

11:43

area. Drug discovery, gene research, et

11:45

cetera, et cetera. These are all

11:47

utilities. But

11:49

a lot of the worries are about

11:51

things that aren't utilities, that it will

11:53

start to make decisions for us. Where's

11:57

the worry point that you have?

11:59

Because, obviously, often most of

12:01

the technologists talk about the positive parts about

12:03

it. And I understand why you would talk

12:05

about the negatives in the beginning, given our

12:07

past history recently. Well, there's, you

12:09

know, three things you can worry about. One

12:14

is that bad

12:17

people with bad intent will use

12:19

AIs for cybercrime,

12:22

bioterrorism, nation-state

12:25

wars. Right. In

12:28

that case, you think, okay, let's make sure the

12:30

good guys have an AI that

12:32

can play defense against those

12:34

things. And that, you know, makes you want to move

12:38

ahead and not fall behind. The

12:41

second thing you could worry about is

12:43

that the rate of change where technical

12:47

support jobs, telesales jobs,

12:51

you know, in the same

12:53

way that that medical diagnosis will be

12:55

superior with the right training set

12:57

and a few more turns of the crank on how

12:59

we drive reliability that there's

13:01

great progress on that. It

13:04

will be superior at a

13:07

telesales or telesupport type job, which,

13:09

you know, those are big

13:12

parts of the economy. And so even though you can say,

13:14

okay, that frees those people up

13:16

so we can have class size

13:18

of five and every handicapped kid

13:20

can have a full-time aide and

13:22

older people, you know, can

13:25

be engaged in social activity. We have

13:27

unmet needs for labor. If we

13:30

free up all this labor, we can shorten

13:32

the work week, but the rate of change

13:34

is scary. And so

13:36

those are the two I worry about. The third one that

13:38

comes up a lot is the loss of control. My

13:41

view is if you manage to get through the

13:43

first two, that

13:45

actually that's not the

13:47

hardest of three. And it's actually

13:50

kind of weird to go to that

13:52

one because it's pretty far in the

13:54

future. We know that bad people will

13:56

use AI in a bad way. The

13:58

AI itself, you know, I think. I

14:00

think it doesn't care. I don't

14:02

think that's the one to obsess,

14:05

particularly given the rate that this is happening.

14:07

This is not a generational change. This is

14:10

within a 10 year

14:13

type change. Sure. One of the

14:15

things that's, when you just say loss of control, I

14:17

think some of us are loss of control to giant

14:19

companies that are gonna dominate

14:21

this area. OpenAI is raising money at

14:23

$150 billion valuation. There's

14:27

some sort of kerfuffle going on among all

14:29

the executives, but it's turning into a for-profit

14:32

corporation. It started as a nonprofit

14:34

to help humanity. Now it's gonna help humans,

14:38

a few humans, a few select humans.

14:40

Microsoft now has vaulted ahead with its

14:42

investment in OpenAI and everything else. If

14:46

there's a few corporations running this, I

14:50

would worry about that, would you? Well,

14:53

in general, although you're a big shareholder,

14:56

Microsoft. We have this thing called competition.

15:00

The main thing you want is

15:02

that it's so competitive that the improvements

15:05

go to benefit the user. That is

15:07

the quality of medical diagnosis improves the

15:09

health system, the availability of that personal

15:11

tutor that and I was out in

15:14

Newark seeing a few

15:17

months ago, that that becomes

15:19

super cheap for kids in the

15:21

inner city, not just wealthy

15:24

kids. I've never

15:26

seen such intense competition. It's

15:29

the same way as the internet in 2000, 2001. The

15:35

failure rate will be unbelievable

15:37

because there's sort of a, oh, it's an

15:39

AI thing, give it a high valuation. So

15:42

consumers will be the

15:44

primary beneficiaries. Now, if

15:46

you ever get to the point where one

15:48

company has knocked the other companies out, then

15:51

fine, the competition authorities can come in. But

15:53

we're in no, we're not even

15:55

close to that. Google is still

15:58

extremely capable in this. OpenAI

16:01

plus Microsoft. There's

16:04

Elon's got xAI, you've

16:06

got Anthropic, at least

16:08

15 companies that aren't that far from the

16:14

state of the art who are lowering

16:16

prices. They're investing way

16:18

above the revenue level. So

16:21

this is the formula for the

16:23

benefits to flow to society. Okay,

16:25

we're going to rush this. But what's very quickly

16:27

your biggest worry, and your

16:29

biggest benefit right now? Well,

16:32

the biggest benefit is

16:34

that I think this technology, and

16:36

this is my primary engagement with it, we

16:39

can get it to the

16:43

inner city, we can get it to

16:46

Africa within the

16:48

same timeframe that it gets to

16:50

the wealthy. Unlike any other technology

16:52

in history, this one, I think

16:54

we can get it out on

16:57

an equity basis. And your worry? My

17:00

worry is just that this

17:02

is the most

17:04

unbounded thing. This is

17:06

not just a tractor, you

17:09

know, obsoleting farmers, where you say,

17:11

okay, it turns out there are

17:13

many other unmet needs there. This,

17:16

you know, which we thought would first

17:18

come for blue collar work through robots,

17:20

you know, the surprise was that with

17:23

this language facility, it

17:25

is actually coming first for white

17:27

collar jobs, but the blue collar thing

17:30

is happening. Robotics. Just

17:32

a little bit later. Right. You

17:35

know, certainly within the next three, four, five

17:37

years. And so it's

17:40

so disruptive at a time

17:43

where government, who you expect to take

17:45

the excess productivity and, you know, spread

17:47

it around in a fair way, reduce

17:50

the work week, our general trust in

17:53

the capacity of government to do complex

17:55

things is pretty low. Yeah. So

17:58

it was a good run, guys. So. Congratulations.

18:00

All right, let's roll the next clip,

18:02

which is an area I

18:04

really spent a lot of time. Do

18:06

you find yourself even at this

18:08

age using your phone and staying on social

18:10

media more than you want to? Oh my

18:13

gosh, yeah. TikTok is so addictive. I'm on it

18:15

like all the time. And have you

18:17

ever run into crazy misinformation about me?

18:20

Crazy misinformation about you all the time. I've

18:22

even had friends cut me off because of

18:24

these vaccine rumors, but I'm a public health

18:26

student at Stanford and I think that there

18:29

is just so much nuance on how do

18:31

you communicate like accurate public health information or

18:33

scientific data? I don't know. I

18:36

need to learn more because

18:39

I naively still believe that

18:42

digital communication can be a force to

18:44

bring us together to have reasonable debate.

18:47

I think one thing like you don't really understand

18:49

about online is like, it's not really like logic

18:51

and fact that win out. Like people want an

18:53

escape. They want to like laugh. They want an

18:55

engaging video. They want to be

18:58

taken away from boring reality. And so like

19:00

the most popular video of you online is

19:02

you literally trying to do the dab. Bill,

19:04

can you do the dab real quick? Damn

19:06

Bill. Or you jumping over

19:08

the chair. Is it true that you can leap over

19:10

a chair from a standing position? It

19:13

depends on the size of the chair. I'll cheat

19:15

a little bit. Yes.

19:18

Those are the most popular because people want to

19:20

escape from things. So I don't think fact and

19:22

reason always win out online. But the

19:24

thing about, you know, I make lots of

19:27

money from vaccines, it's even hard for me

19:29

to figure out where that comes from. It's

19:31

not like a political organization. It's just madness.

19:33

And who promotes that? I

19:35

think it's fear. I mean, everyone was stuck at

19:38

home during a pandemic. We're all scared for our

19:40

lives. No one really knows what to trust or

19:42

what to believe. So that's what our society does.

19:45

Okay. So can

19:47

you do the chair right now? No. A

19:50

smaller one. Yeah. Little

19:52

stool. I

19:54

can do a stool. Your daughter's very

19:56

wise. But most of the

19:58

stuff about you isn't funny. I

20:00

spent a lot of time telling people

20:02

you're not Satan, that you don't put

20:04

chips in people's brains. I spent

20:07

an inordinate amount of time doing that, which

20:09

is an unusual position for me to be in. But

20:12

let's talk a little bit about information.

20:14

You talk about Anthony Fauci, who has

20:16

had death threats. I just interviewed him

20:19

recently. One of the reasons government is

20:21

in the trouble it's in is the polarization which is

20:23

fueled by online stuff. And

20:26

there's no question about it. I know they're trying to come

20:28

up with studies that it's not true, but a new study

20:30

just came out showing it is absolutely true.

20:32

The polarization has been further

20:34

impacted, especially around, we use

20:36

the word misinformation, disinformation, but it's

20:38

really propaganda, isn't it? That's really

20:40

what's happening. 1.2

20:43

billion views were

20:45

of Elon doing misinformation on

20:47

the platform he bought, which

20:49

he enjoys spewing misinformation on. It's

20:52

actually the biggest purveyor of misinformation right

20:54

now. Can you

20:56

talk a little bit about this and the

20:58

worries you have, because it does directly impact

21:00

you yourself, which I'm not worried about you,

21:02

but everybody here

21:04

in this room? Well,

21:07

I didn't anticipate that

21:10

if you want to belong to a group, there

21:12

almost become certain beliefs.

21:16

You know, the

21:18

election was stolen. You know,

21:20

vaccines have negative effects. Right.

21:22

And recently, Haitians eating dogs.

21:24

It's a self-interested individual who

21:28

somehow gets royalties from vaccines.

21:30

I mean, you know, totally

21:32

false, provably wrong. I

21:35

want to track the position of

21:38

people. I don't know why I

21:40

do, but it's amazing. Because you're

21:42

Satan. But go ahead. But even

21:45

Satan doesn't need to know where people

21:47

are. It's where.

21:49

What does he do with it? So

21:53

that's it. Just

21:56

strange. And, you know, we always try to deal

21:58

with it with humor. As you say, I

22:00

have nothing overall to complain about, but the

22:03

idea that your sort of

22:05

group beliefs are reinforced

22:08

online, and so you

22:10

have developed a reality that

22:12

when people say, no, the election

22:14

was not stolen, you're

22:17

like, no, that would be almost denying that

22:19

I'm part of this group, and so I'm

22:21

gonna behave that way. It's

22:25

incredibly scary

22:28

because it's definitely putting

22:30

us into separate

22:32

camps, and it's hard

22:34

to see. People say, oh, we didn't

22:36

regulate social media properly. Well, do

22:40

we know now? Are we regulating

22:42

it today? Is there a clear understanding of it?

22:44

And then, of course, AI that

22:46

we just discussed, if anything, supercharges

22:49

the ability to create

22:52

credible misinformation. What would you

22:54

do now to stop this? Because I think people

22:56

are in their own separate, and Microsoft was an

22:58

early investor in Facebook when it was a $15

23:00

billion valuation. Nice one, that was a

23:02

good one. I

23:04

doubted that, I'm sorry, I was wrong. But

23:07

when you think about what should be done

23:09

now, because if people are in their own

23:11

information bubbles in a way that's profound, and

23:14

then AI, for example, can supercharge it, as

23:16

you say, what would be

23:18

the solutions to avoiding that? Well,

23:21

most things where the country

23:23

has been off track, you start to

23:25

see the harms, and then

23:28

people self-correct, parents

23:31

play a role, educators play

23:34

a role, and

23:36

some degree of banning the extreme

23:38

behavior plays a role. But

23:40

first, we have to come to a view that,

23:42

okay, this really is a problem. The

23:45

United States may not be the first place to

23:47

get this under control. We have the First Amendment, and

23:49

our division and

23:54

our divisiveness

23:57

is particularly high. I

24:00

wish I could say, okay, such and such

24:02

a country has done this

24:04

extremely well. The

24:06

country that actually controls craziness on

24:09

the internet is China, but

24:11

they do it in a way that

24:14

destroys- They do like to track

24:16

everybody where they're going. Yeah, and

24:18

they don't let crazy things out there. They

24:22

do better, but at the loss

24:24

of democratic freedoms.

24:27

So what do you do in a

24:29

situation when, say you have someone like

24:31

Elon who's suddenly decided to let misinformation

24:33

flow toxic waste all over

24:35

the place. And in some cases, people

24:37

who try harder, like a Mark Zuckerberg,

24:39

who you were a mentor to him,

24:42

he and I had a big argument about Holocaust

24:44

deniers a couple of years ago, and I kept

24:47

saying, this is gonna lead to antisemitism down the

24:49

line. And he said, well, we're not gonna regulate

24:51

them at all. We'll see what happens. And you

24:53

can see what happens when you anticipate. So is

24:56

there anything government can do, or

24:58

are we at the mercy of these companies

25:00

to decide? Because

25:02

a few years later, Mark did clamp down

25:04

on Holocaust deniers, but it took him to

25:06

do it, which is problematic, I think. Well,

25:09

the question is, do you create some level

25:11

of liability for these companies? Correct. And

25:14

many forms of that would essentially

25:16

mean social media companies would

25:19

go out of business. But a little

25:21

bit, I think we ought to edge

25:23

in the direction of

25:26

forcing them to take some

25:28

more responsibility. It

25:31

is very tricky because if somebody says, vaccines

25:34

in general kill people, that's

25:37

wrong, and it caused older

25:39

people who needed COVID vaccines not to take

25:42

them. If people say, hey, vaccines sometimes have

25:44

side effects, that's

25:46

true. We need to talk to people

25:48

about the net benefit and how we avoid

25:50

those side effects and things. So

25:52

the exact dividing line, where all

25:55

of a sudden some AI wakes up and shuts

25:57

it down, or

26:00

labels it. I

26:02

don't even feel like we're trying to find that, that

26:05

happy medium. Who is responsible? Is it

26:07

the parents? Like California is banning different

26:09

things, different states are doing. Where is

26:11

the line? Because it can't be

26:14

Elon Musk and Mark Zuckerberg. It just can't be.

26:16

Well, nobody's really, there hasn't been much

26:18

regulation. You know, taking young people getting

26:20

online, that's not an enforced thing. So

26:23

I'm a little surprised how hands off

26:28

we've been on these things. I mean,

26:31

we over-regulate them offline and we under-regulate

26:33

them online. It's really quick. No, I

26:35

thought The Anxious Generation and many observations

26:37

along those lines are very

26:39

profound and we should take action

26:41

on it. So if you could do one thing, what would

26:43

it be? I know what I would do, but what would

26:46

you do? Well, misinformation actually is the one topic and

26:48

I say it in the series, that

26:51

I say, hey, young people,

26:53

you grew up with this thing. You understand

26:55

the phenomenon better. We

26:58

basically pass this as a big problem

27:00

to you. In AI,

27:02

I have thoughts, global health, I

27:04

have thoughts, but misinformation, I'm

27:07

just stunned that there

27:10

aren't more clear, constructive things

27:12

relating to policy and technology.

27:15

Yeah, so you're just like, good luck, good luck,

27:17

young people. Actually, the way my

27:19

kids dealt with it is they took all of social media

27:21

off their phones, which I thought worked rather well. We'll

27:25

be back in a minute. is

28:00

a subscription service that removes your personal information

28:02

from the largest people search databases on the

28:04

web, and in the process, helps prevent potential

28:06

ID theft, doxing, and phishing scams. I have

28:09

really enjoyed Delete.me, largely because I'm a pretty

28:11

good person about tech things, and I have

28:13

a pretty good sense of privacy in pushing

28:15

all the correct buttons, but I was very

28:17

surprised by how much data was out there

28:20

about me. Take control of your data and

28:22

keep your private life private by signing up

28:24

for Delete.me. Now at a special discount to

28:26

our listeners today, get 20% off your Delete.me

28:29

plan when you go to joindelete.com/Cara, and

28:31

use the promo code Cara

28:33

at checkout. The only way

28:36

to get 20% off is

28:38

to go to joindelete.com/Cara, enter

28:40

the code Cara at checkout.

28:42

That's joindelete.com/Cara, code Cara. Support

28:47

for this show comes from Quince. For some

28:49

people, the shift from summer to fall means

28:52

pumpkin spice lattes. For others, it means swapping

28:54

out your shorts and flip flops for sweaters

28:56

and other cozy clothes. If you're in that

28:58

latter category, you might wanna

29:00

check out Quince. Quince offers luxury

29:02

clothing essentials at reasonable prices. You can

29:05

find seasonal must-haves like Mongolian cashmere

29:07

sweaters for $60 and

29:09

comfortable pants for any occasion. Plus

29:11

beautiful leather jackets, cotton cardigans, soft denim,

29:13

and so much more. All

29:15

Quince items are priced 50 to 80% less than

29:18

similar brands because Quince partners directly with

29:21

top factories, cutting the cost of the

29:23

middleman. I love my Quince order.

29:25

It was easy to do, it came right

29:28

away. I actually bought a piece of luggage

29:30

to put all those clothes in, and it's

29:32

a really great suitcase and at much less

29:34

of the price than similar hard-shelled suitcases, and

29:36

I really love it. Get

29:38

cozy in Quince's high-quality wardrobe essentials.

29:41

Also, go to quince.com/Cara for free

29:43

shipping on your order and 365-day

29:45

returns. That's

29:48

q-u-i-n-c-e.com/Cara to get free shipping

29:50

and 365-day returns. quince.com/Cara.

30:02

Support for this show comes from Janus

30:04

Henderson Investors. When it

30:07

comes to learning a new skill, there are no

30:09

stupid questions and investing is definitely a skill. So

30:11

if you ever wondered how your money could

30:13

be doing more, but you're not interested in

30:15

paying a ton for investment advice, then Janus

30:17

Henderson could be a good place to start.

30:20

Wherever you are on your investment journey, Janus

30:23

Henderson Investors want to help you out. They

30:25

offer free personalized investment advice to help

30:28

you evaluate your investment strategy depending on

30:30

your needs, risk tolerance and other factors.

30:32

All you need to do is just ask. With

30:35

Janus Henderson, you get access to over

30:37

90 years of world-class investment expertise. They

30:40

say their goal is to get you feeling

30:42

more confident about your financial present and future.

30:45

To find

30:47

out more,

30:50

visit janushenderson.com/advice,

30:52

spelled janushenderson.com/advice.

30:55

Janus Henderson, investing in a brighter

30:57

future together. There are

30:59

no additional costs for advice beyond the

31:02

underlying fund expenses. Okay,

31:06

next one. Let's go to the next one.

31:08

We're moving on very quickly into climate change.

31:11

Another happy topic. If you think of our

31:13

addiction to fossil fuels and the way that

31:15

we're going about attempting to wean off of

31:17

it, we're losing. The fossil fuel industry is

31:20

making record profits. Emissions are

31:22

going up. People are right to

31:24

be incredibly skeptical and disillusioned. There's

31:26

part of the movement that I don't

31:29

fully agree with, which is that

31:32

you denigrate the current way of

31:34

doing things before we have a

31:36

replacement. I wish there was

31:38

as much emphasis on the new thing, but

31:42

I'm an optimist and I think

31:44

we will limit

31:46

temperature increase. So this

31:48

is something you spent a lot of time on. That's

31:51

what our last interview was about. You seem worried at

31:53

the time of where it's going, the temperature increases. Obviously,

31:55

we have another hurricane in Florida, and

31:57

yet legislators are pretending climate change doesn't exist.

32:00

Talk a little

32:02

bit about where we are right now. And

32:04

one thing that you talked about a lot,

32:06

which I've been spending a lot of time

32:08

studying, is nuclear energy. Microsoft just recently is

32:11

possibly going to open Three Mile Island, which

32:13

I'm like, AI, Three Mile Island, great. Seems

32:15

like it could go well. But actually, it

32:17

could. It could actually go well in order

32:20

to be in compute power for AI. Talk

32:23

a little bit about where we are now, because

32:26

it's really, it seems

32:28

to be accelerating. And young people are

32:30

very worried. Again, another bag of crap we've

32:33

handed them in this regard. Well,

32:36

the key to solving

32:38

climate change, I wrote a book with

32:40

a theory of change that

32:43

has to do with replacing

32:45

all emitting activities for goods

32:47

and services with new

32:50

approaches that are green, that have

32:52

no emissions. Today, those

32:54

green approaches are extremely expensive, green

32:56

cement, green aviation fuel. You talked

32:59

about the green premium. And

33:02

only by funding

33:04

innovation, having some degree

33:07

of tax credits and

33:09

buyers willing to pay extra, then

33:12

as you scale up, those costs come down and

33:14

you get the magic that says you can even

33:16

go to a middle income country like

33:18

India and say, okay, here's this new way to

33:20

make cement. It does not

33:22

cost more. And

33:24

so in 2015, when the

33:27

Paris Accord is signed, I

33:29

commit to create Breakthrough Energy. I'm

33:31

there with country leaders, Modi, Obama,

33:33

and they commit to double energy

33:35

R&D budgets, which

33:37

they did to a small degree,

33:39

they didn't get to that doubling.

33:41

But now Breakthrough

33:43

Energy and other investors are funding

33:47

unbelievable innovation, new ways to

33:50

make steel, cement, meat, clothing,

33:52

you name it. And

33:55

although those won't roll out in time

33:58

on a global basis to get

34:00

a two degree target, they

34:02

will roll out and they'll avoid us getting

34:04

to extreme warming.

34:07

The sad thing about climate change right

34:09

now is people, given how much

34:11

we'll be able to limit it, they

34:14

overestimate the impact of climate change

34:16

on rich countries. Rich countries

34:18

have air conditioning. We can change our

34:20

crops. We have savings. The

34:23

big losses are near

34:25

the equator where you have

34:27

poor people who depend on

34:29

agriculture. When you hurt

34:31

agriculture, you cause malnutrition, which causes

34:34

a lot more death. The

34:37

picture of

34:39

the suffering and the need for climate adaptation

34:41

should lead to us being more

34:43

generous to these countries at a

34:45

time where, because of

34:47

other things, we are actually being significantly

34:50

less generous to the poor countries. So one

34:52

of the things you talked about

34:54

is nuclear as a solution, correct?

34:57

Absolutely. Nuclear fission and

34:59

or fusion will be very,

35:02

very important along with renewable

35:04

sources. You said renewables aren't enough.

35:06

I mean, you're quite strict. Renewable alone

35:09

is not enough because of

35:11

the intermittency. Japan will

35:13

not be powered by renewable energy. South

35:15

Korea won't be powered by renewable energy.

35:17

When you have a cold snap and

35:20

that cold front is sitting there, no

35:22

wind, no solar, people still want their

35:25

houses not to be subzero. Right.

35:28

So talk a little bit because there's a bunch

35:30

of people, including Sam Altman of OpenAI who are

35:32

working at Helion. I

35:35

just was with someone who was talking about how you're going

35:37

to have a small nuclear device in your house to

35:39

heat it. Everyone's going to have a small nuclear device. I'm

35:41

just telling you, I have to sit and listen.

35:43

You don't have to listen to these people. How

35:46

do you look, how does that roll out

35:48

given the reputation? I

35:50

mean, I know it's laughable, but Three Mile Island, you're

35:53

like, maybe not. But at the same

35:55

time, maybe. So how do you make that? How

35:57

is Microsoft going to make that argument? Well.

36:00

You know, coal kills people when

36:02

it's mined, it kills people from local pollution,

36:04

natural gas pipelines blow up. I'm

36:08

not involved in third generation nuclear,

36:10

which is what resuscitating that plant

36:12

is. I am a

36:14

large funder of fourth generation nuclear, which

36:16

is a plant with no high

36:19

pressure in it. Very

36:21

different design that everybody, just like

36:24

they said you should recover rocket stages to

36:26

make space flight cheap. They've always said you

36:29

should use metal cooling because all these safety

36:31

issues of what happens when you shut the

36:33

reactor down are completely solved. It's a very

36:35

simple design, and we

36:37

should be able to do it for about a third of

36:40

the cost of current reactors. So, you

36:42

know, we're pursuing that dream. Everything

36:44

about nuclear fission and fusion, people have

36:46

a great deal of skepticism of will

36:49

it be cheap enough, and what will

36:51

the safety look like? But, you know,

36:54

I'm putting billions into it

36:56

because I'm quite confident we can

36:58

make that case. So what do

37:00

you say to young people like this who we still

37:02

are dependent on fossil fuels? Well,

37:05

the banning fossil fuels

37:07

before you have a substitute is

37:10

a way of getting governments

37:13

elected who don't think climate change

37:15

exists. You

37:17

know, if you just

37:19

say, no, you can't drive to work. I mean,

37:22

so why

37:25

do they go to universities and say don't invest

37:27

in the existing? Why don't they go and say,

37:29

please take your money and invest in these

37:32

new approaches? Because if you take your money

37:34

away from the existing stuff, that doesn't create

37:36

a solution. You know, when I was in

37:38

2015 in the climate crisis, I'm like, you

37:41

guys are making a bunch of pledges. What's

37:43

your plan for steel? There were

37:45

zero startups about making steel

37:47

a new way, 6%

37:50

of emissions, cement, also 6%. No

37:52

work going on. So if you

37:54

want to out-compete the dirty

37:57

stuff, you actually have to get

37:59

the entrepreneur. You have to inspire them,

38:01

you have to have high-risk capital. And

38:04

so a lot of the things, I love

38:06

the activists because the issue is a huge

38:08

issue and they deserve all the credit for

38:12

keeping it there. But when they

38:14

say the solution is to

38:16

stop consuming, that

38:18

means that can India build basic shelter?

38:20

So we're not going to stop using

38:24

cement, a lot of cement, even if

38:26

rich countries didn't build another building ever.

38:29

It's a rounding error. This is a middle-income

38:32

country problem of providing

38:34

basic lifestyle-level

38:38

goods and services.

38:41

And so it's an invention problem. Now

38:43

I say that about everything, but in

38:45

this case it's correct. All

38:47

right. Is there anything really unusual you've invested in? And

38:49

then we're going to move on to the next one.

38:52

Well, there's so many cool things. What's the weirdest

38:54

energy thing? We have 130 companies that

38:56

Breakthrough Energy's funded. We

38:59

have new ways of making food. We

39:02

have ways of making cows not emit

39:05

natural gas. The idea that there's

39:07

hydrogen that you can dig, geologic

39:09

hydrogen, that might surprise

39:11

people and

39:14

that's going to be a huge help to solve this.

39:17

Yeah, I'm going in a hydrogen-powered plane soon. You want to

39:19

come? Wow. Yeah, you

39:22

want to come? Anyway,

39:24

yes, I'm funding a lot of that stuff.

39:27

I hope it works. Yeah. Each

39:30

one of my four children are like, no. And

39:32

I said, yes, I'm getting in it. Anyway,

39:34

let's go to the next thing. Are people too

39:37

rich? Speaking of that, all right. Under

39:40

the tax system I would go for, the

39:42

wealthy would have, say, a third

39:45

as much. Well, needless to say, I would go

39:47

a lot further. And

39:49

I think, you know, and your friend Warren Buffett

39:51

makes the point that his

39:53

effective tax rate is

39:55

lower than his secretary's. And

39:58

that is not what the American people want to see. They

40:00

do want to see the wealthy pay their fair share

40:02

of taxes, but you have a

40:04

political system which, unfortunately, represents the

40:06

needs of the wealthy much more than

40:09

the needs of ordinary Americans. You know,

40:11

they do these happiness questionnaires. Have you

40:13

seen that? You know, oh, yeah. What

40:15

they find is countries where people have

40:18

that economic security, as in Scandinavia, usually

40:20

Denmark or Finland or somebody ranks

40:22

at the very top. Why is that? I

40:25

think it's because people don't have to deal

40:27

with the stress about how they're going to

40:29

feed their kids or provide health care or

40:31

child care. If you take that level of

40:33

economic stress, if I say

40:35

to somebody, you're never going to have to worry about whether you're

40:37

going to feed your family, whether your

40:39

kids are going to have health care. Thank you.

40:42

Is it going to

40:44

make their life perfect? No.

40:46

Will it ease their stress level, bring

40:49

more happiness? Security, I think it will. Well,

40:53

kudos. You did talk to Bernie. I

40:56

remember when we had Elizabeth Warren, I think

40:58

one of the years you were at Code, everybody,

41:02

especially men in the audience, you could feel them

41:04

seize up when she was talking about these kind

41:07

of things, the rich paying

41:09

more taxes. Kamala Harris, Vice

41:12

President Harris, talked about it last night in an interview.

41:14

Fair share, nothing against capitalism,

41:17

but everything's out of whack. And

41:19

actually, corporate tax rates are at the lowest

41:21

since 1939 right now. Well,

41:25

people like me pay 40

41:27

some percent in taxes. I

41:30

know, I know, I need better lawyers. I need your lawyers. So

41:34

tell me, talk about this and why did you want

41:36

to address it? Because you've talked

41:38

to Mark Cuban, I've talked to him a

41:40

lot about the wealth tax and things like

41:42

that. So how do you deal with income

41:45

inequality? Because I feel like it's fueling a

41:47

lot of the division that we find ourselves

41:49

in. Well, the idea that

41:51

you have the opportunity to

41:54

create a company that's very valuable, the

41:56

US is the envy of the world at that.

42:00

So, well, I

42:02

would set tax rates quite a bit higher

42:04

for rich people. We

42:08

still have to grow the economy

42:11

to get to the ideal level

42:13

to set the safety net as

42:15

high as Bernie alludes to. As you

42:17

get richer, you raise the safety net.

42:21

That's the story of the United States. The

42:24

government's not very good at executing, so it's

42:27

always imperfect. But

42:30

I would not make it illegal to be

42:32

a billionaire. So that's a

42:34

point of view. He

42:37

would take away over 99% of what I have. I

42:43

would take away 62% of what I have. So

42:46

that's a difference. You

42:49

definitely do get to the point where

42:53

you're killing the goose that lays the

42:55

golden egg. North

42:58

Korea, very equal. Unbelievable

43:00

equality. So I

43:03

don't even like the equality framing because 100

43:06

years ago, most people were never literate. So

43:10

we've created wealth, and I think

43:12

that the system that does that has a

43:14

few elements that we shouldn't throw out. So

43:17

clearly, being capitalist,

43:19

being an entrepreneur, giving people incentives to do

43:21

things. So what do you do when people

43:24

have, they were just talking about who's going

43:26

to be the world's first trillionaire, which is

43:29

astonishing to think about. Well there's no

43:31

trillionaires. Not yet. I don't think there will

43:33

be. You don't think there will be. No. They

43:36

thought it would be, wasn't you? But I think you're

43:38

okay again. I remember meeting

43:40

with someone who was a billionaire, and

43:42

I said, this was 10 years ago, I said,

43:44

you have to do something about income inequality, or

43:46

you're going to have to armor plate your Tesla.

43:49

And then I looked at them and I realized they

43:51

kind of wanted to armor plate their Tesla. They wanted

43:53

to live in that world. And how do you deal

43:56

with that on a real level when you do have

43:58

this insane amount of wealth and people

44:00

still in abject poverty. Where

44:03

do you come together with someone like Bernie

44:05

Sanders? Well, abject poverty is- Different,

44:09

right? Is in Africa, there

44:12

is abject poverty. So, you

44:14

know, I give tens of billions

44:16

to Africa to relieve abject poverty,

44:18

and I encourage others to

44:20

do the same. It's

44:22

not a very big club of people

44:26

involved in that. My dad

44:29

is deceased, but he

44:32

and I worked on promoting

44:35

the estate tax. I'm a huge believer

44:37

in the estate tax. I continued to

44:39

promote that. They actually got

44:41

rid of the estate tax briefly,

44:45

and I think that's a mistake, because

44:47

those are dynastic fortunes, not somebody

44:51

who actually created something. And so

44:53

I think we, and it's stunning

44:55

to me how countries

44:57

don't have an estate tax. China does not

45:00

have an estate tax. You

45:02

know, Europe has very limited estate

45:04

tax. So, you know,

45:07

I think we should have

45:09

higher taxation on the rich, but not

45:11

that would prevent you from having large

45:13

fortune. I wouldn't set a ceiling. And

45:16

then I think for once you pay

45:18

those taxes, whatever's left over,

45:21

you should engage in philanthropy. You should

45:23

take the skill that allowed you to

45:25

succeed in business. You know,

45:28

hiring people, think through scientific organization,

45:31

and give it away. How hard is that to

45:33

do right now? There's not a ton of people

45:35

following in your footsteps, I would say, some. You

45:38

know, we have people who are giving a lot away. And,

45:44

you know, in the Giving Pledge, you

45:46

can look there are people who are

45:48

giving at a

45:51

pretty good rate. You know, I think people should do

45:53

more. I think they would enjoy giving

45:55

more. So when you,

45:58

what you're talking about is the idea that the

46:00

middle class feels very squeezed. This is a topic

46:02

in this election. Trump wants

46:04

to give more tax breaks to the wealthy.

46:07

Kamala Harris is talking about giving tax breaks

46:09

to the middle class. Where do you think,

46:13

if you were running for president, what

46:15

would be your stand on this? Well,

46:18

I wouldn't get elected. I

46:21

would bring up the deficit and say that, yes,

46:24

we can tax the rich a lot more, but

46:26

even so, the 2017 tax

46:29

cut, a

46:31

lot of that's gonna have to expire because the

46:34

deficits will lead to a level

46:36

of inflation that voters will not be

46:38

happy with. There's a huge lag in this,

46:41

but nobody talks about

46:43

the deficit. I would raise the safety

46:46

net somewhat, but I would also,

46:49

for future generations, reduce the deficit

46:52

quite a bit. So 62% of

46:54

you, you would keep 62%? We can

46:57

have the rest. We can have, you would

46:59

keep 38? Yeah. And

47:01

we can have the rest? If you had the

47:03

tax system I have in mind, if that

47:05

had been in place throughout my entire life,

47:08

I would have about 38% of what I have. All

47:11

right, we'll take it. All

47:13

right, last one, episode five,

47:16

can we outsmart diseases? Your

47:18

biggest topic. When you sit

47:20

in those wards, you

47:22

just see how frantic things are because the

47:25

wards are never adequately staffed

47:28

because malaria is quite seasonal. It's

47:30

as the rainy season comes and

47:33

the mosquito population grows exponentially.

47:41

As a kid, I can't even tell you

47:44

how many episodes of malaria I went through.

47:47

I can still clearly see the

47:49

picture of my father. He was standing

47:51

next to my bed, looking

47:53

at me. I could really see

47:56

a lot of tears in his eyes. If

48:00

malaria was killing 600,000 people in the US or

48:02

in Europe, the

48:07

problem would have completely changed by now. So

48:09

malaria was one of the areas you focused

48:11

on most strongly. Talk about where it is

48:13

right now. I do remember you let mosquitoes

48:16

out at TED, as I recall, which you're not

48:18

gonna do here. But

48:20

where is it right now from your

48:22

perspective in these worldwide diseases? Still, it's

48:25

not neglected anymore because of you and

48:27

the Gates Foundation, but where is that

48:29

and what are the diseases you're looking

48:32

at? Yeah, so malaria at

48:35

the turn of the century, when

48:37

the Gates Foundation is created, is killing a bit

48:40

over a million a year. And

48:44

over the first 15 years of

48:46

this century, we got it down to about

48:50

a half million. And it's gone up slightly

48:53

from there, but just say a half million a year.

48:56

This is a disease that there's

48:58

almost no funding because the disease

49:00

is in the poor countries who

49:03

don't have the resources and the rich

49:05

countries just aren't involved.

49:09

They don't see it as a problem. We

49:12

do have in the pipeline some incredible tools.

49:14

So that gentleman you met there, Diabaté, is

49:18

a scientist in Burkina Faso who

49:20

has tools to kill

49:23

mosquitoes. And

49:26

we're going through a lot of

49:28

experiments based on his work and others.

49:31

And within three to five years, this

49:33

tool will be ready for release. And

49:35

so if we get a surge in

49:37

funding, then we could

49:39

start the effort to eradicate

49:42

malaria. By killing mosquitoes.

49:44

Yeah, so what you have to do is get rid

49:46

of 90% of the

49:49

mosquito population. And so the reinfection rate,

49:51

you slow it down enough that you

49:53

can test and treat. And if you

49:55

go through a bunch of low seasons

49:57

where you've done that, This

50:00

is what happened in the US. We

50:02

had a lot of malaria, but

50:04

at the time, you could spray

50:07

DDT on the ponds, and that

50:09

decimated mosquito populations. And so because

50:11

of winter's low seasons, we actually

50:13

got to zero. We're trying to

50:15

create the equivalent in

50:17

places like Nigeria, where

50:20

as a child, you have a

50:24

one in six chance of dying before the age

50:26

of five. So when you think about

50:28

this, is it continues to

50:30

be the most important disease you're fighting right

50:33

now, malaria? Or are there others that you're...

50:36

It's hard to rate. TB

50:38

kills the most people of

50:41

any disease. Malnutrition

50:44

is the one thing if I had a wand,

50:47

I would get rid of, because if you're

50:49

not malnourished, you're less than half as likely

50:51

to die, even if you get malaria, diarrhea,

50:53

or pneumonia, and it cripples you for life,

50:56

because you can never catch up if your

50:58

brain doesn't develop when you're young. Sickle

51:02

cell disease is this evil disease.

51:06

We have a $2 million cure, but

51:09

the foundation is working to make it a $200 cure,

51:11

and that means

51:13

we could take it to Africa, where you

51:15

have millions of kids with sickle cell versus

51:19

60,000 in the US, and every one is a tragedy.

51:22

So those are the ones you're focused on, because another

51:24

one in this country at least is heart disease and

51:26

obesity, of course, which is sort of the opposite. That's

51:28

right. We live to a

51:30

very old age. We'll

51:34

be back in a minute. Support

51:42

for this show comes from Fetch Pet Insurance.

51:45

You all know I love my cat, Lovely. If

51:47

you don't, let me fill you in. Lovely is

51:49

a very needy cat right now, because we're living

51:51

in an apartment while we renovate our house. She's

51:54

very quirky. She's a tortie. And one

51:56

of my favorite stories is how much she is

51:58

the mayor of the neighborhood outside at

52:00

our house and she sort of greets everyone as

52:02

they walk by and I always get a call

52:05

is your cat lost I'm like no just leave

52:07

her alone she just wants to meet everybody around.

52:09

She also has a boyfriend next door of our

52:11

neighbors and she ignores him all the time it's

52:13

a great relationship. Our family wouldn't

52:16

be complete without our animals and if you're

52:18

a pet parent the last thing you want

52:20

in life is have to choose between your

52:22

pets health and a devastating vet bill so

52:24

if you're looking for pet insurance consider Fetch.

52:26

Fetch pet insurance covers up to 90% of

52:28

unexpected vet bills including what other providers charge

52:30

extra for or don't cover at all

52:32

things like behavioral therapy, sick visit, exam

52:35

fees, supplements and other alternative or holistic

52:37

care. Fetch even covers acupuncture.

52:39

With Fetch you can use any vet

52:41

in the US or Canada. Bottom line

52:43

Fetch covers more, more protection, more savings,

52:45

more love. With Fetch more is more.

52:48

Get your free quote today

52:51

at fetchpet.com/Cara that's fetchpet.com/Cara fetchpet.com/Cara

52:53

and go get yourself a

52:55

pet if you don't have

52:58

a pet you're not as

53:00

happy as you could be. Support

53:04

for this show comes from Amazon Business. We

53:07

could all use more time. Amazon

53:09

Business offers smart business buying solutions so

53:11

you can spend more time growing your

53:13

business and less time doing the admin.

53:16

I can see why they call it smart. Learn

53:18

more about smart business buying at amazonbusiness.com What

53:25

does impactful marketing look like in today's day and

53:27

age? And if you're a marketing professional what decisions

53:29

can you make today that will have a lasting

53:31

impact for your business tomorrow? We've

53:34

got answers to all of the above and

53:36

more on the Prof G podcast. Right now

53:38

we're running a two-part special series all about

53:40

the future of marketing. It's time

53:42

to cut through the noise and make a

53:44

real impact so tune in to the future

53:46

of marketing, a special series from the Prof

53:49

G podcast sponsored by Canva. You

53:51

can find it on the Prof G feed wherever you

53:53

get your podcasts. about

54:01

how you outsmart disease. You've been at this

54:03

for what? It's not 20 years, right? Yeah,

54:06

no, the foundation is created

54:08

and becomes the largest at the turn

54:10

of the century. So

54:12

what are you most

54:14

focused on as you move into the new

54:16

phase of what you're doing? Still malaria and

54:19

TB and these diseases? Health broadly, we

54:21

work in agriculture because that

54:24

we can make eggs and

54:26

milk really cheap. We can have crops that deal

54:28

with climate change. A lot of that's climate adaptation

54:31

related, but global

54:33

health, all of the

54:36

resources I have will

54:38

go to these global health issues. And

54:40

we ought to be able to achieve

54:43

in my lifetime, we're almost done

54:46

with polio. We should be

54:48

able to get rid of measles, malaria, almost

54:51

all of malnutrition. So it's actually very

54:53

exciting work. It's very hopeful. We've

54:56

come a long ways from 10 million

54:58

a year to now 5 million. But

55:01

to get down to that 1%, we

55:05

have to cut it in half three more times. So

55:07

what's next for what's next? What are you going

55:09

space travel? Are you going on Mars with Elon?

55:12

Nope. What are you

55:14

gonna do more of these? I

55:19

think we'll go three, four, five years

55:21

and see. What

55:24

interests you, these are big topics,

55:26

but is there, again,

55:28

space travel is one, cloning?

55:32

Well, genetic editing and being tasteful

55:34

about how we use that, I

55:39

focus on that to cure sickle cell

55:42

at a very low cost or to

55:44

cure HIV at a very low cost.

55:46

So it's about the

55:48

diseases that make the world

55:51

incredibly inequitable. All right, last

55:53

question. Are you hopeful or

55:55

it's pretty tense right now, it's still, and

55:57

it's been tense for a couple of years.

56:00

Out of COVID, we're still sort of not recovered

56:02

from that. And a lot of people

56:04

aren't. How do you, what is your mood

56:07

right now? Well,

56:10

you know, ask me on November 6th whether

56:15

climate change is real or not.

56:17

Right. So I'm guessing who

56:19

you're voting for, but... You

56:22

can definitely guess where

56:25

my energy is going. Overall, I'm still

56:27

an optimist. I mean, if you

56:30

zoom out and say, OK, where were we, you

56:32

know, 50 years ago, 100 years ago, you know,

56:36

humans are ingenious at

56:38

doing things. I

56:41

hope that the younger

56:43

generation can look at polarization,

56:45

look at the negative effects of

56:48

digital, including misinformation, but not only

56:51

misinformation and shaping

56:54

AI so that its miraculous

56:57

capability in health and education

57:00

isn't canceled out by

57:03

disorder and

57:07

a lack of

57:09

purpose. So we have left some

57:11

real challenges for this next

57:14

generation. But, you know, I'm overall

57:16

very hopeful. I get to

57:18

see more innovation than other people, so I understand why

57:20

I would be more hopeful. There's a

57:23

lot, whether it's climate or health, that

57:26

is very, very exciting. Great. Well,

57:28

I have to say, it's very good. You're pretty good

57:30

at interviewing. I mean, I'm not going to lose my

57:33

day job, but nonetheless, you do a nice job. Anyway,

57:35

thank you so much, and thank you, Bill Gates. Thank

57:37

you. All right. Thanks. On

57:48

With Kara Swisher is produced by Christian

57:50

Castro-Rosell, Kateri Okum, Jolie Meyers and Meghan

57:52

Burney. Special thanks to Kate

57:55

Gallagher, Kalyn Lynch and Claire Hyman. Our

57:57

engineers are Rick Kwan, Fernando Arruda and

57:59

Alana Leah Jackson, and our theme

58:01

music is by Trackademics. If

58:04

you're already following the show, you get

58:06

to fly in a hydrogen powered plane

58:08

with me and Bill Gates, if he

58:10

dares. If not, Satan is indeed tracking

58:12

you. He's just not Bill Gates. Go

58:14

wherever you listen to podcasts, search for

58:16

On with Kara Swisher and hit follow.

58:18

Thanks for listening to On with Kara Swisher

58:21

from New York Magazine, the Vox Media Podcast

58:23

Network and us. We'll be back on Thursday

58:25

with more. Support

58:31

for this show

58:34

comes from Amazon Business. We

58:36

could all use more time. Amazon Business

58:38

offers smart business buying solutions, so you

58:40

can spend more time growing your business

58:43

and less time doing the admin. I

58:45

can see why they call it smart. Learn

58:48

more about smart business buying at

58:50

amazonbusiness.com. Artificial

58:54

intelligence, smart houses, electric

58:57

vehicles. We are living in

58:59

the future. So why not

59:01

make 2024 the year you go

59:03

fully electric with Chevy? The

59:05

all-electric 2025 Equinox EV LT

59:08

starts around $34,995.

59:10

Equinox EV,

59:12

a vehicle you know, value you'd expect,

59:14

and a dealer right down the street.

59:17

Go EV without changing a thing. Learn

59:20

more at chevy.com/Equinox EV.

59:24

The manufacturer's suggested retail price

59:26

excludes tax, title, license, dealer fees,

59:28

and optional equipment. Dealer sets

59:30

final price.

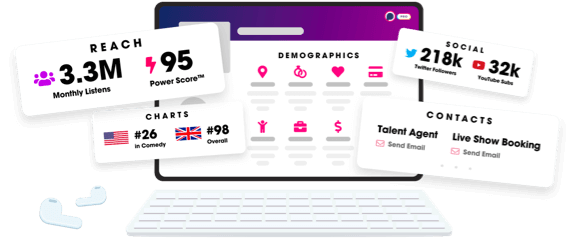

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us